How Not to Be Wrong: The Power of Mathematical Thinking, by Jordan Ellenberg

I know what you are thinking. You don’t need this book. You are already never wrong. I know I am. Math is funny that way.

But you are wrong. You do need this book. It has plenty of examples of people who were wrong simply because they did the math wrong.

This book tries to explain why you should learn mathematics. It gives an introduction to some basic principles and demonstrates what awaits those ignorant of those concepts. The basic principles are not taught by giving you series of eye-blurring equations, but rather some broader concepts.

The book entices with

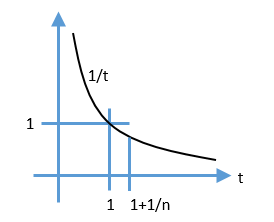

- The myth of if a little of something is good, more is always better

- The Baltimore Stockbroker, or which mutual funds are good?

- How to win the lottery

- Does cancer cause smoking?

The mathematical terms surrounding these ideas are linear vs. nonlinear relationships, inference, expected values, and regression. See, that wasn’t so bad, was it?

The book is similar to Freakonomics, with more emphasis on the math and how results are obtained. Ellenberg likes to point out basic flaws in reasoning that caused people to be wrong, and how math presented the correct way out.

One example early in the book is of Abraham Wald, a brilliant researcher at a classified program during World War II in New York City called SRG (the Statistical Research Group). By way of illustrating how much brain power was situated at SRG, the author says that Milton Friedman (the future Nobel Prize winner in Economics at that time) was often the fourth-smartest person in the room.

One problem that military leaders brought to Wald was “where should they put the armor on planes?” If you put no armor on the planes, they would be shot down easily. On the other hand, if you put too much armor on the planes, they couldn’t fly very far. Thus (an example of a nonlinear relationship), there must be a happy medium in between the extremes that was the “best,” (what mathematicians call the optimum) and they wanted to know what that was.

What they had were the data from planes that made it back from Europe. Obviously, some were shot down, and did not return, so they analyzed the ones that returned and found things like

| Engine |

1.11 bullet holes per square foot |

| Rest of plane |

1.8 bullet holes per square foot |

The generals said, obviously you want to put more armor on the rest of plane, see how many more bullet holes it got, but how much more?

Wald’s conclusion, after his analysis, was actually the reverse. You should “put the armor where the bullets are not”. A simple way to understand this is to imagine this scenario:

Suppose a single bullet in the engine takes a plane down, but large numbers of bullets elsewhere are not harmful. (Mathematicians get to imagine these scenarios when solving problems). In this case, ALL the planes coming back from Europe would have ZERO holes in the engine (because otherwise they would have been shot down) and only holes elsewhere. In that case, you would want to put armor only on the engine, and NONE anywhere else (because it was not critical). This simple type of reasoning, explored in detail (as Ellenberg provides a glimpse at the complex equations Wald used in his report), provided the answer the generals were looking for, albeit in a surprising direction. This reasoning is an example of survivorship bias, which is what happens when you look at samples that are not representative, but instead have a bias. In this case, they were looking at the data for surviving planes only, not all planes. This effect makes evaluating mutuals funds hard, as the “bad” ones may have a tendency to go away.

The book is amusing and entertaining, and well-written. Interspersed with examples are notes about historical figures that bring the examples to life.

Some other examples from the book that stood out in my mind are:

How three teams (including one of M.I.T. students) beat the lottery in Masschusetts. Using math.

How A could be correlated with B, and B correlated with C, and yet A is NOT correlated with C. Yes, you need to read the book. It has to do with the geometric interpretation of correlation.

The story of the Baltimore Stockbroker. I like this story, but I will retell it here as my father told it to me in the 1970s (so it predates the 2008 BBC show The System, which popularized this idea). A tout (tipster) gives our friend three tips as to the winning horse at a racetrack, one each on Monday, Tuesday, and Wednesday. Each one of those turns out to be correct. So on Thursday, when the tout offers to sell the man the name of the winning horse that day for $10,000 he jumps at the chance. What happened? Well, unknown to our friend, on Monday, the tout gave 1000 people tips to a race with 10 horses entered. To 100 he gave horse #1, a second one he gave horse #2, and so on. Then, on Tuesday, he ignored the 900 people who he gave the wrong Monday tip to, and focused on the 100 that got the correct tip. Of those 100, he gave them each a second tip: 10 got horse #1, another 10 got horse #2, and so on. Well, you see what happened. Our friend is the unlucky person that got 3 correct tips in a row. He doesn’t know about the 999 people who got bad advice. And he is going to pay for it.

When Ellenberg tells this story, he uses a stockbroker giving tips, but it’s the same idea.

Sometimes we take for granted some discoveries and inventions from the past, such as what is a bit of information (without which phrases like ’10 megabit download speed’ would be so much gibberish), or what is the correct way to compute probabilities. Ellenberg tries to highlight some historic struggles to find the correct way to address these problems.

One of the major themes of the book is how “math extends common sense.” Perhaps you will find your common sense extended after reading this book.